UX Research Portfolio: One-Page "Analysis-only" case study — Quick Wins

Most important actions for advisors — Concept Testing (Analysis with AI ✨)

Challenge

Advisors and their staff begin each day by piecing together action items across CRM, client accounts, email, calendars, and alerts. This takes significant time and effort and frequently leads to missed quick-win growth opportunities.

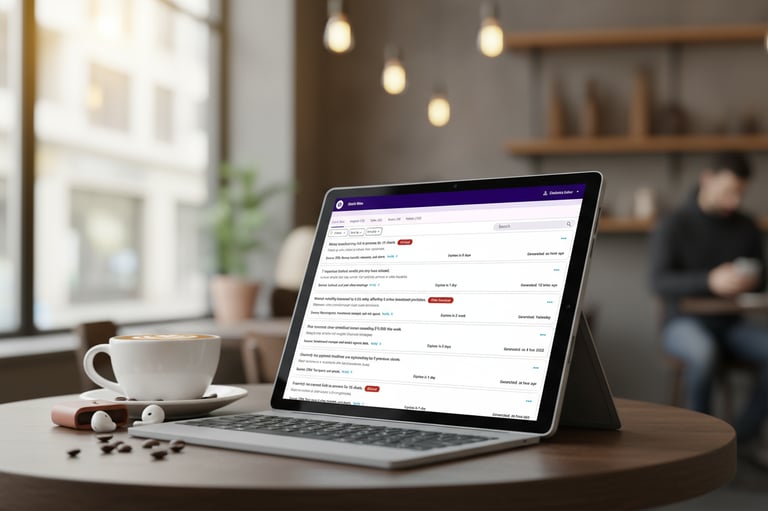

Solution

Provide a unified daily view that surfaces the top ten quick-win growth opportunities, generated through intelligent analysis of client data across 50+ company products and reports.

My Role

Planned research, set goals & scope

Defined participants (advisors, staff, support desk)

Facilitated moderated concept tests

Synthesized findings

Presented actionable recommendations; closed feedback loop with the Design and Development team

Stakeholders & Audience

Product Owners

Experienced Advisors and staff

Research Goals

What do advisors expect to see when identifying their top action items?

How do advisors perceive the concept of quick wins in their workflow?

To what extent does the quick wins concept align with advisors’ mental models?

What actions do advisors intend to take upon seeing quick wins?

Timeline & Methods

Accelerated turnaround: 8 days from plan to report readout.

Moderated one-on-one concept testing (remote, screen sharing)

Analysis

Timeline: Approximately 3 hours to analyze 7 sessions.

Process

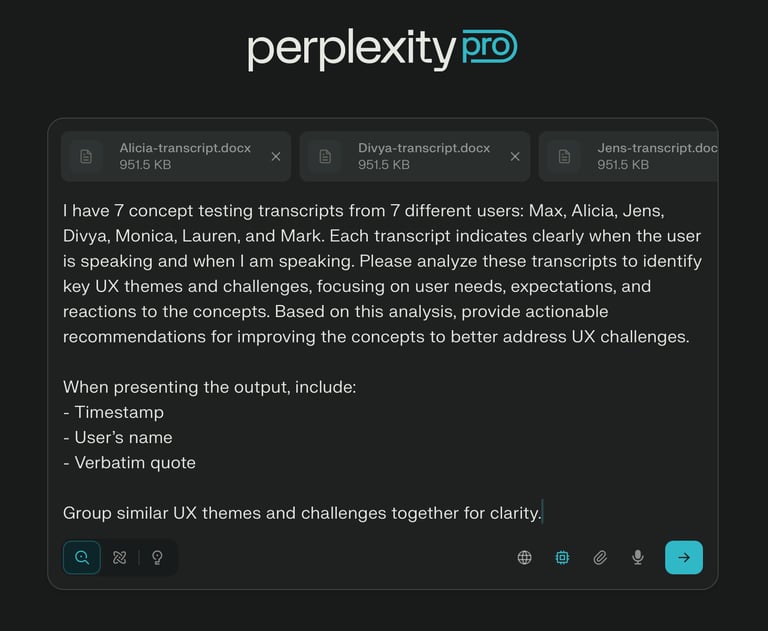

Download transcripts in Word format.

Upload transcripts to Perplexity and provide a detailed prompt.

Manually verify the AI-generated outputs.

Synthesize key findings and compile recommendations.
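
The manual verification step can be partly supported by a small script. The following is a minimal Python sketch, not the process used in this study: it assumes the transcripts have been exported to plain text and that the AI-reported verbatims have been collected into a list. The function names `normalize` and `find_unverified_quotes` and the sample data are hypothetical. It flags quotes that do not appear word-for-word in a transcript, so the researcher knows which ones to check by hand first.

```python
import re


def normalize(text: str) -> str:
    """Lowercase, unify curly quotes, and collapse whitespace so that
    minor formatting differences do not cause false mismatches."""
    text = text.replace("\u2019", "'").replace("\u2018", "'")
    text = text.replace("\u201c", '"').replace("\u201d", '"')
    return re.sub(r"\s+", " ", text).strip().lower()


def find_unverified_quotes(transcript: str, quotes: list[str]) -> list[str]:
    """Return the quotes that do not appear verbatim in the transcript."""
    haystack = normalize(transcript)
    return [q for q in quotes if normalize(q) not in haystack]


# Hypothetical transcript excerpt and AI-reported quotes, for illustration only.
transcript = (
    "00:12:03 User: Is there an option to pick past due actions?\n"
    "00:12:10 Researcher: Tell me more about that."
)
quotes = [
    "Is there an option to pick past due actions?",  # present in the transcript
    "I always want past due items hidden.",          # not present
]

unverified = find_unverified_quotes(transcript, quotes)
print(unverified)
```

Quotes shortened with an ellipsis are flagged as well, because the elided text breaks the exact match. A flagged quote is a prompt for a manual look, not proof of a hallucination.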

Prompt that generated the output

Objective

Analyze 7 concept-testing transcripts (users: Max, Alicia, Jens, Divya, Monica, Lauren, Mark) to extract UX themes, challenges, user needs/expectations, reactions to concepts, and produce actionable recommendations, with traceable evidence via timestamps, speaker name, and verbatim quotes.

Inputs

Seven interview transcripts, each marking who is speaking (User vs. Researcher/Interviewer) and including timestamps.

Constraints

Use only content from...

Key Findings

Quick wins resonated with advisors, but they need more control, visibility, and delegation across workflows.

Quick wins alignment

Quick wins matched advisors’ mental model for immediate actions, though several expected more customization of what counts as a quick win.

The field requested toggles and filters to tailor quick wins to their workflow and priorities.

Past‑due visibility

Advisors expected past‑due items to appear alongside urgent quick wins to avoid blind spots across their action list. Verbatim: “Is there an option…to pick past due actions? I would expect to include or exclude those from the quick wins.”

Assignment and tracking

Advisors want to assign urgent items to staff, set ownership, and track progress to completion from the same view.

Status signals (assigned, in progress, blocked, done) and handoff clarity were emphasized for accountability.

Unified critical signals

Advisors want important actions surfaced from email, CRM, and pending money transfers so they aren’t lost in noisy notification streams.

A single, deduplicated feed of critical actions with source context and due dates was requested to reduce misses.
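
One way to picture the deduplicated feed described above is the following Python sketch. It is an illustration under stated assumptions, not the product's design: the `Action` fields, the choice of (client, action type) as the duplicate key, and the sample records are all hypothetical. Duplicates reported by several systems are merged into one entry that keeps the earliest due date and lists every source.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Action:
    client_id: str
    action_type: str
    source: str  # e.g. "email", "crm", "transfers"
    due: date


def deduplicate(actions):
    """Merge actions that share a client and action type.

    Each merged entry keeps the earliest due date and every source that
    reported it. The result is sorted by due date, soonest first.
    """
    merged = {}
    for a in actions:
        key = (a.client_id, a.action_type)  # assumed duplicate key
        if key not in merged:
            merged[key] = {
                "client_id": a.client_id,
                "action_type": a.action_type,
                "due": a.due,
                "sources": [a.source],
            }
        else:
            entry = merged[key]
            entry["due"] = min(entry["due"], a.due)
            if a.source not in entry["sources"]:
                entry["sources"].append(a.source)
    return sorted(merged.values(), key=lambda e: e["due"])


# Hypothetical records, for illustration only.
feed = deduplicate([
    Action("C-1", "pending_transfer", "email", date(2025, 3, 4)),
    Action("C-1", "pending_transfer", "crm", date(2025, 3, 3)),
    Action("C-2", "outdated_info", "crm", date(2025, 3, 1)),
])
for entry in feed:
    print(entry["due"], entry["client_id"], entry["action_type"], entry["sources"])
```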

Recommendations

Customize quick wins: Add controls to tune the quick wins algorithm with user feedback loops (approve/deny, snooze, reclassify) and weighting preferences for action types, sources, and due‑date sensitivity.

Filtering: Provide filters to include/exclude action types, source systems (email, CRM, transfers), owners, status, and priority.

Quick wins content suggestions:

Money transfers (in/out), including holds and pending items.

Failed trades or trades not executed as intended.

Corrections and adjustments requiring advisor review.

Missing, incorrect, or outdated client information.

Failed or skipped recurring transactions.

Suspicious or anomalous activity with risk indicators.
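
The customization and filtering recommendations above could be prototyped roughly as follows. This is a hedged Python sketch rather than the actual quick wins algorithm, which the study does not describe: the `QuickWin` and `Preferences` fields, the scoring formula (a per-type weight multiplied by a simple due-date urgency), and the sample items are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class QuickWin:
    title: str
    action_type: str
    source: str
    status: str
    days_until_due: int  # negative means past due


@dataclass
class Preferences:
    excluded_types: set = field(default_factory=set)
    excluded_sources: set = field(default_factory=set)
    include_past_due: bool = True
    type_weights: dict = field(default_factory=dict)  # action_type -> weight
    top_n: int = 10  # the concept surfaces the top ten quick wins


def rank(items, prefs):
    """Apply the user's filters, then order by weighted urgency."""
    kept = [
        i for i in items
        if i.action_type not in prefs.excluded_types
        and i.source not in prefs.excluded_sources
        and (prefs.include_past_due or i.days_until_due >= 0)
    ]

    def score(i):
        # Assumed formula: items due sooner score higher; past-due items
        # are treated as due today.
        urgency = 1.0 / (1 + max(i.days_until_due, 0))
        return prefs.type_weights.get(i.action_type, 1.0) * urgency

    return sorted(kept, key=score, reverse=True)[: prefs.top_n]


# Hypothetical items and preferences, for illustration only.
items = [
    QuickWin("Pending transfer hold", "money_transfer", "transfers", "assigned", 2),
    QuickWin("Failed trade", "failed_trade", "crm", "in progress", 0),
    QuickWin("Outdated address", "client_info", "crm", "blocked", -3),
    QuickWin("Client email follow-up", "email_followup", "email", "assigned", 1),
]
prefs = Preferences(
    excluded_sources={"email"},
    include_past_due=False,
    type_weights={"money_transfer": 4.0},
)
ranked = rank(items, prefs)
print([i.title for i in ranked])
```

Here the email item and the past-due item are filtered out, and the higher weight on money transfers places the transfer hold ahead of the failed trade even though the trade is due sooner.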

Impact

Validated Business Use Cases: Confirmed key quick-win use cases and helped the business define build stories.

Faster Research Turnaround: Tested concepts with the field and delivered actionable insights to product teams within 8 days, just before tech resources were assigned.

Reflection

Using AI as a co-assistant: Leveraging an AI assistant to quickly analyze seven concept tests proved worthwhile.

Manual verification necessary: AI can sometimes hallucinate, merge distinct themes, or distort verbatims. Manual checks ensured quality, caught errors efficiently, and prevented wasted effort.

Case Study at a Glance

Current: The field is overwhelmed by emails, alerts, and nudges, and often misses low-hanging opportunities.

Future: The field can log in and see prioritized actions upfront, making it easy to focus and respond.

CONNECT

Chaitanya.gaikar07@gmail.com

© 2025. All rights reserved.